Drone mapping has come a long way in the past decade, but it still faces major challenges.

GPS limitations, slow photogrammetry processing, and expensive LiDAR setups make accurate mapping a tedious process.

This is where SLAM technology is changing the game, by allowing drones (and other autonomous vehicles) to map their surroundings in real time while navigating them.

SLAM is opening the door to faster, smarter, and more autonomous drone operations.

But how does it work? What does this mean for the future of drone mapping? Let’s break it down.

What is SLAM Technology?

SLAM (Simultaneous Localization and Mapping) is a technology that enables a device such as a robot, drone, or autonomous vehicle to map an unknown environment while simultaneously tracking its position within that map.

It is a critical component of autonomous navigation, allowing machines to move and make decisions without relying on human intervention or any external positioning systems like GPS.

This mapping information is used to carry out tasks such as path planning and obstacle avoidance in various industries like automotive, aviation, maritime, and commercial drone flying.

How SLAM Technology Works

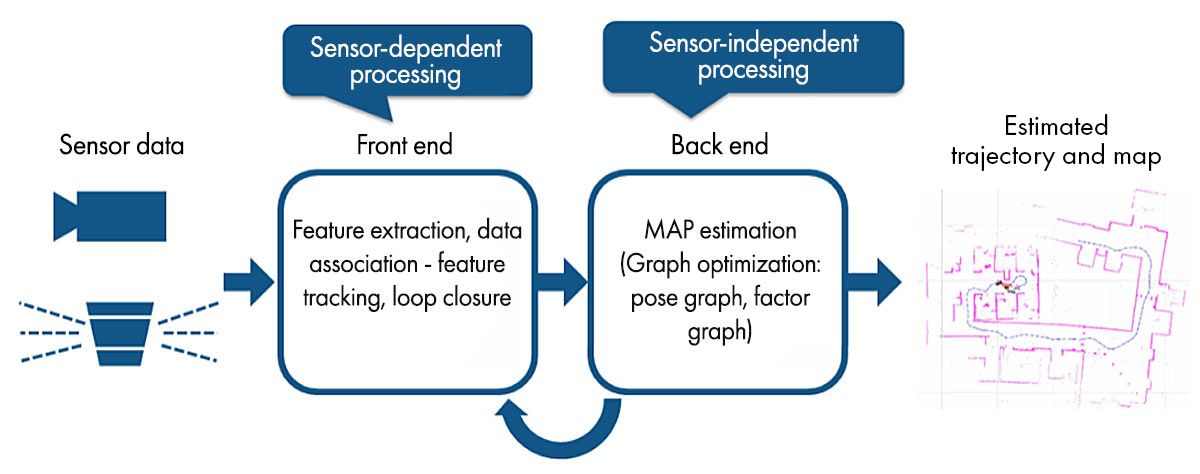

SLAM technology relies on a combination of sensors and intelligent algorithms to create real-time maps. Here’s how it works:

1. Gathering Data from the Environment

SLAM-equipped devices use a variety of sensors to scan their surroundings:

- Cameras (monocular, stereo, or RGB-D) capture images of the environment.

- LiDAR sensors measure distances by bouncing laser beams off surfaces.

- IMUs (Inertial Measurement Units) detect motion, acceleration, and orientation.

- GPS & barometers (when available) provide additional reference points.

All these sensors work together to build a live 3D model of the area.
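One simple way to picture sensors "working together" is to weight each reading by how much it is trusted. The sketch below is only an illustration, not the method of any particular SLAM system: it fuses two noisy estimates of the same quantity (for example, altitude from a barometer and from GPS) using inverse-variance weighting. The function name and the example readings are assumptions made for this example.

```python
def fuse_estimates(value_a, var_a, value_b, var_b):
    """Combine two noisy measurements of the same quantity.

    Each measurement is weighted by the inverse of its variance, so the
    more certain sensor contributes more to the fused result.
    """
    weight_a = 1.0 / var_a
    weight_b = 1.0 / var_b
    fused_value = (weight_a * value_a + weight_b * value_b) / (weight_a + weight_b)
    fused_var = 1.0 / (weight_a + weight_b)
    return fused_value, fused_var


# Hypothetical readings: barometer says 10 m (variance 1), GPS says 20 m (variance 4).
altitude, variance = fuse_estimates(10.0, 1.0, 20.0, 4.0)
print(altitude, variance)
```

The fused estimate lands closer to the more reliable sensor, and its variance is lower than either input, which is the basic payoff of combining sensors.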

2. Detecting Key Features

Next, the SLAM algorithm identifies important details in the environment, like edges of buildings, textures on the ground, or unique objects like trees or lamp posts. These act as reference points to help the device understand its position.

For example, imagine you’re walking through a mall for the first time. You might remember a large fountain, a specific store sign, or an escalator to track where you are. SLAM does the same thing with visual and depth-based data.
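To make "detecting key features" concrete, here is a minimal sketch of a classic corner detector (the Moravec operator) in plain Python. It is a teaching example under simplifying assumptions: the image is a small grid of numbers, the window is fixed at 3x3, and the function names are made up for illustration. Production SLAM systems use more robust detectors.

```python
def moravec_score(image, row, col):
    """Return the Moravec corner score for one pixel of a 2D grayscale grid.

    A 3x3 window around the pixel is compared with copies of itself shifted
    in four directions. A corner changes under every shift, so the smallest
    sum of squared differences (SSD) across the shifts stays large.
    """
    shifts = [(0, 1), (1, 0), (1, 1), (1, -1)]
    scores = []
    for d_row, d_col in shifts:
        ssd = 0
        for i in range(-1, 2):
            for j in range(-1, 2):
                original = image[row + i][col + j]
                shifted = image[row + i + d_row][col + j + d_col]
                ssd += (original - shifted) ** 2
        scores.append(ssd)
    return min(scores)


def strongest_feature(image):
    """Return the (row, col) of the pixel with the highest corner score."""
    margin = 2  # keeps the shifted 3x3 window inside the image
    candidates = [
        (r, c)
        for r in range(margin, len(image) - margin)
        for c in range(margin, len(image[0]) - margin)
    ]
    return max(candidates, key=lambda rc: moravec_score(image, *rc))


# Toy 10x10 scene: dark background with a bright block whose corner is at (4, 4).
scene = [[1 if r >= 4 and c >= 4 else 0 for c in range(10)] for r in range(10)]
print(strongest_feature(scene))
```

Flat areas and straight edges score zero here because at least one shift leaves the window unchanged. Only the corner stands out, which is why corners make good reference points.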

3. Mapping the Area in Real-Time

Using the data from its sensors, the SLAM system builds a 2D or 3D map of the environment, all while the device is in motion.

Unlike photogrammetry, which needs time-consuming post-processing, SLAM maps the environment instantly and updates it in real time.
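A common way to build such a map incrementally is an occupancy grid, where each cell stores how likely it is to be blocked. The sketch below assumes a 2D grid, a single range reading at a time, and hand-picked update values. All names and parameters are illustrative, and the ray is sampled coarsely rather than traced exactly.

```python
import math


def integrate_range_reading(log_odds, position, bearing, distance,
                            cell_size=1.0, free_update=-0.4, hit_update=0.85):
    """Update a sparse occupancy grid in place from one range reading.

    log_odds maps (col, row) cell indices to a log-odds occupancy value:
    negative means probably free, positive means probably occupied.
    """
    x, y = position
    dir_x, dir_y = math.cos(bearing), math.sin(bearing)

    def cell_at(dist):
        return (math.floor((x + dist * dir_x) / cell_size),
                math.floor((y + dist * dir_y) / cell_size))

    # Cells the beam passed through are more likely to be free.
    for step in range(int(distance / cell_size)):
        cell = cell_at(step * cell_size)
        log_odds[cell] = log_odds.get(cell, 0.0) + free_update

    # The cell where the beam ended is more likely to be occupied.
    hit = cell_at(distance)
    log_odds[hit] = log_odds.get(hit, 0.0) + hit_update


def occupancy_probability(value):
    """Convert a log-odds value back to a probability between 0 and 1."""
    return 1.0 - 1.0 / (1.0 + math.exp(value))


grid = {}
integrate_range_reading(grid, position=(0.5, 0.5), bearing=0.0, distance=3.0)
print(sorted(grid.items()))
```

Because each reading only nudges the affected cells, the map can be updated as every new measurement arrives instead of in a batch afterwards.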

4. Localizing the Position

Now, this is where the real magic happens. While mapping, SLAM constantly recalculates the device’s position within the environment.

- If the device moves forward, it adjusts the map.

- If it tilts sideways, it updates its perspective.

- If it revisits a known area, it corrects accumulated errors and refines accuracy (this is known as loop closure).

This means that even if GPS fails, the device can still estimate where it is based on its surroundings.
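The effect of loop closure can be sketched in a few lines. The example below assumes a drone that tracks its position by adding up motion steps (which drift), then recognizes its starting point and spreads the accumulated error back along the path. This linear correction is a deliberately simplified stand-in for the pose-graph optimization that real SLAM systems typically use, and the function names are hypothetical.

```python
def integrate_odometry(steps, start=(0.0, 0.0)):
    """Accumulate (dx, dy) motion steps into a list of estimated positions."""
    poses = [start]
    for dx, dy in steps:
        x, y = poses[-1]
        poses.append((x + dx, y + dy))
    return poses


def close_loop(poses, recognized_position):
    """Spread the end-of-path error evenly over the whole trajectory.

    recognized_position is where the final pose should be, according to a
    place the system has recognized from earlier in the flight.
    """
    last_index = len(poses) - 1
    if last_index == 0:
        return list(poses)
    error_x = poses[-1][0] - recognized_position[0]
    error_y = poses[-1][1] - recognized_position[1]
    corrected = []
    for index, (x, y) in enumerate(poses):
        fraction = index / last_index  # later poses have drifted more
        corrected.append((x - error_x * fraction, y - error_y * fraction))
    return corrected


# A square flight path where every step drifts 0.1 units to the right.
drifting_steps = [(1.1, 0.0), (0.1, 1.0), (-0.9, 0.0), (0.1, -1.0)]
estimated = integrate_odometry(drifting_steps)
corrected = close_loop(estimated, recognized_position=(0.0, 0.0))
print(estimated[-1], corrected[-1])
```

Before the correction the drone believes it ended about 0.4 units from where it started. After recognizing the start point, the end of the path snaps back and the intermediate positions shift proportionally.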

Why SLAM Matters Today

SLAM’s ability to map and navigate in real time is impressive, but it’s not a new concept.

The idea was originally developed in the 1980s, and the acronym SLAM was coined in the 1995 paper ‘Localization of Autonomous Guided Vehicles’.

Here are some real-world examples of SLAM technology in action:

1. Robotic Vacuum Cleaners: If you’ve ever used a Roomba or any other smart vacuum, then you’ve already seen SLAM at work. These devices map your home in real time, detect furniture, and adjust their paths to avoid obstacles.

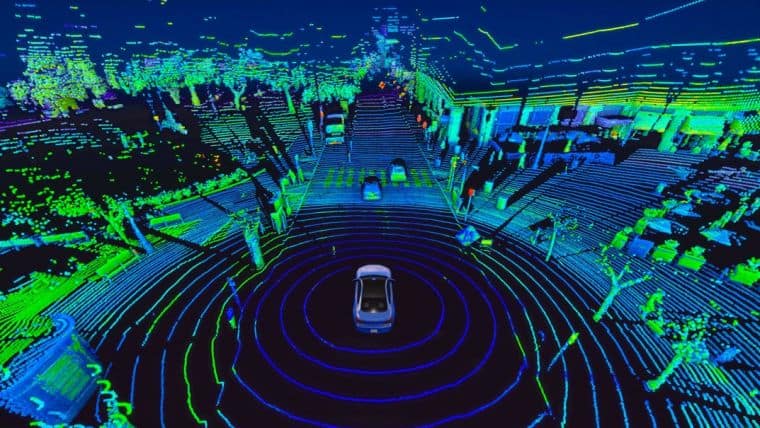

2. Autonomous Vehicles: Self-driving cars from companies like Tesla and Waymo use SLAM to navigate roads, detect pedestrians, and avoid obstacles – all while updating their environment map in real time.

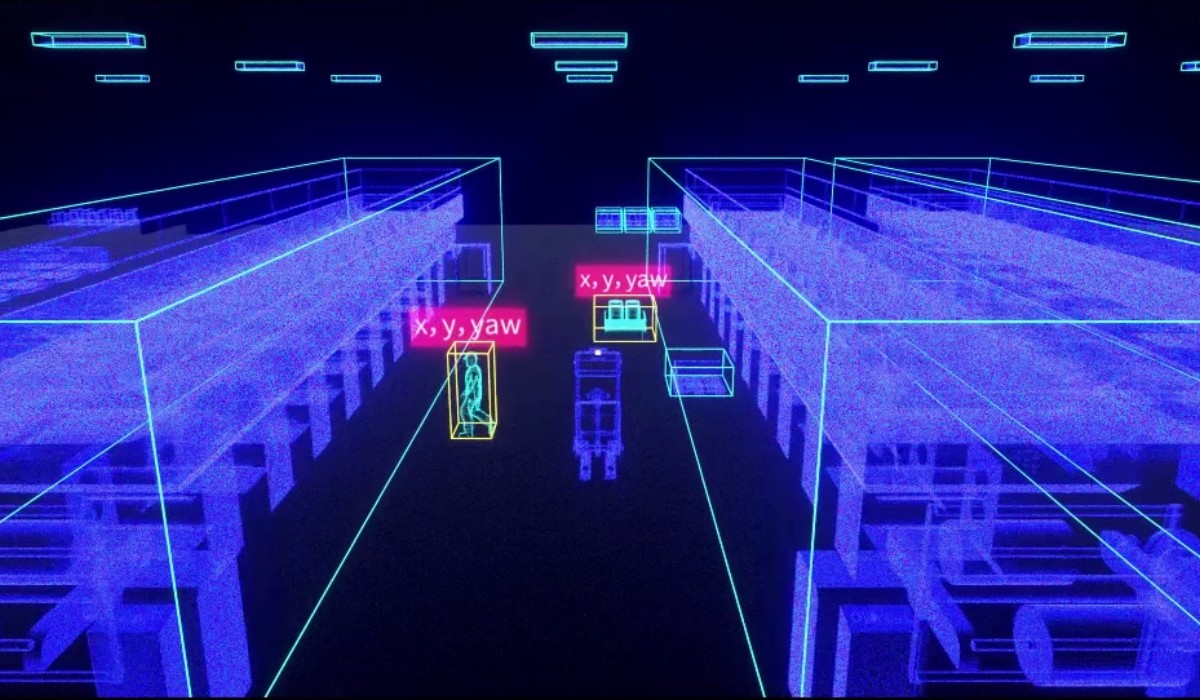

3. Indoor Warehouse Drones: Companies like Amazon and DJI use SLAM-powered drones to scan inventory, navigate shelves, and automate warehouse management.

So, what’s new about SLAM technology today?

Recent advancements in AI, deep learning, and sensor technology have made SLAM faster, more accurate, and more accessible than ever.

Let’s understand this better by analyzing the impact of SLAM technology on drone mapping.

How SLAM Technology is Transforming Drone Mapping

Drones are revolutionizing the way we map the world.

But to create accurate maps, they need to know exactly where they are at all times, and that’s where SLAM comes in.

SLAM technology helps drones map unknown areas while tracking their position in real time, all without GPS.

This is a game-changer for industries that depend on accurate 3D maps—construction, surveying, public safety, and even defense.

Let’s break down exactly how SLAM is transforming drone mapping and why it’s the future.

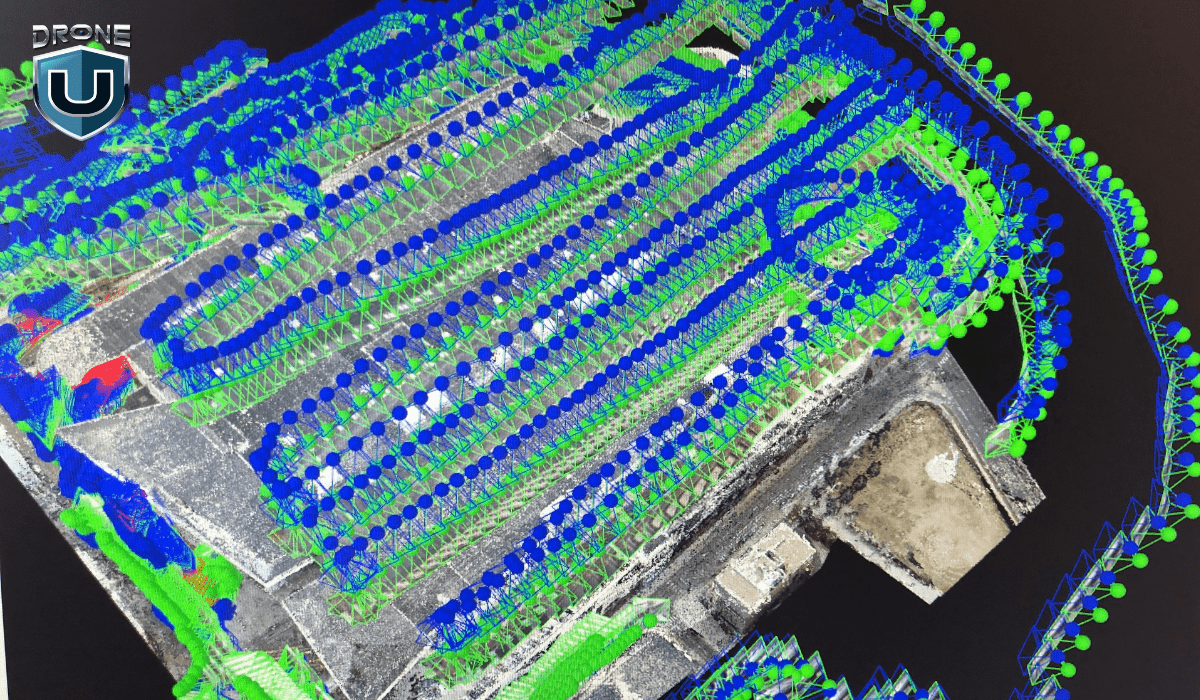

1. Real-Time 3D Mapping

Traditional drone mapping, especially photogrammetry, requires capturing hundreds (or thousands) of images and then stitching them together using software.

This process can take hours or even days before you get a usable 3D map.

But with SLAM technology, the mapping happens instantly as the drone flies.

Instead of waiting for post-processing, the drone’s sensors and onboard computing build a real-time 3D model of the area.

This means:

- Faster decision-making

- Live data for critical missions

- More efficient workflows, with no waiting on post-processing

2. Works in GPS-Denied Environments

One of the biggest limitations of traditional drone mapping is its dependence on GPS.

In places where signals are weak or blocked, like underground tunnels, dense urban areas, or even forests, GPS-based mapping becomes unreliable or fails entirely.

This is where SLAM technology shines since it doesn’t rely on GPS. Instead, it uses cameras and motion sensors to track its position while simultaneously mapping the environment.

3. More Accurate & Adaptive Navigation

Most traditional drone mapping methods rely on pre-planned flight paths. If conditions change, like unexpected obstacles, shifting terrains, or moving objects, the drone can’t adjust in real time.

SLAM fixes this by making drones more adaptive. The drone actively scans its environment and updates its map as it moves, meaning it can:

- Avoid unexpected obstacles

- Self-correct mapping errors

- Handle fast-changing environments
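As a rough illustration of adaptive navigation, the sketch below replans a route on a small grid map when a new obstacle shows up. It uses breadth-first search for simplicity, and the grid, coordinates, and function name are assumptions for this example. Real drones plan in 3D with more sophisticated planners.

```python
from collections import deque


def shortest_path(grid, start, goal):
    """Breadth-first search on a grid where 0 is free and 1 is blocked.

    Returns the list of (row, col) cells from start to goal, or None if
    the goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        if current == goal:
            path = []
            while current is not None:
                path.append(current)
                current = came_from[current]
            return path[::-1]
        r, c = current
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in came_from:
                came_from[(nr, nc)] = current
                queue.append((nr, nc))
    return None


area = [[0, 0, 0],
        [0, 0, 0],
        [0, 0, 0]]
planned = shortest_path(area, (0, 0), (0, 2))

# The live map reveals an obstacle on the planned route, so the drone replans.
area[0][1] = 1
replanned = shortest_path(area, (0, 0), (0, 2))
print(planned, replanned)
```

The key point is that the plan is recomputed from the live map, so a change in the environment leads to a detour rather than a collision.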

So, how exactly do drones use SLAM for mapping?

In the next section, let’s explore the different types of SLAM and how they shape drone mapping and 3D modelling.

Types of SLAM in Drone Mapping & 3D Reconstruction

Different drones use different types of SLAM depending on the environment, mapping accuracy needs, and available sensors.

Some SLAM systems rely on laser scanning, while others use camera-based navigation for more cost-effective implementation.

Let’s break down the three types of SLAM most commonly used in drone mapping today.

1. LiDAR SLAM

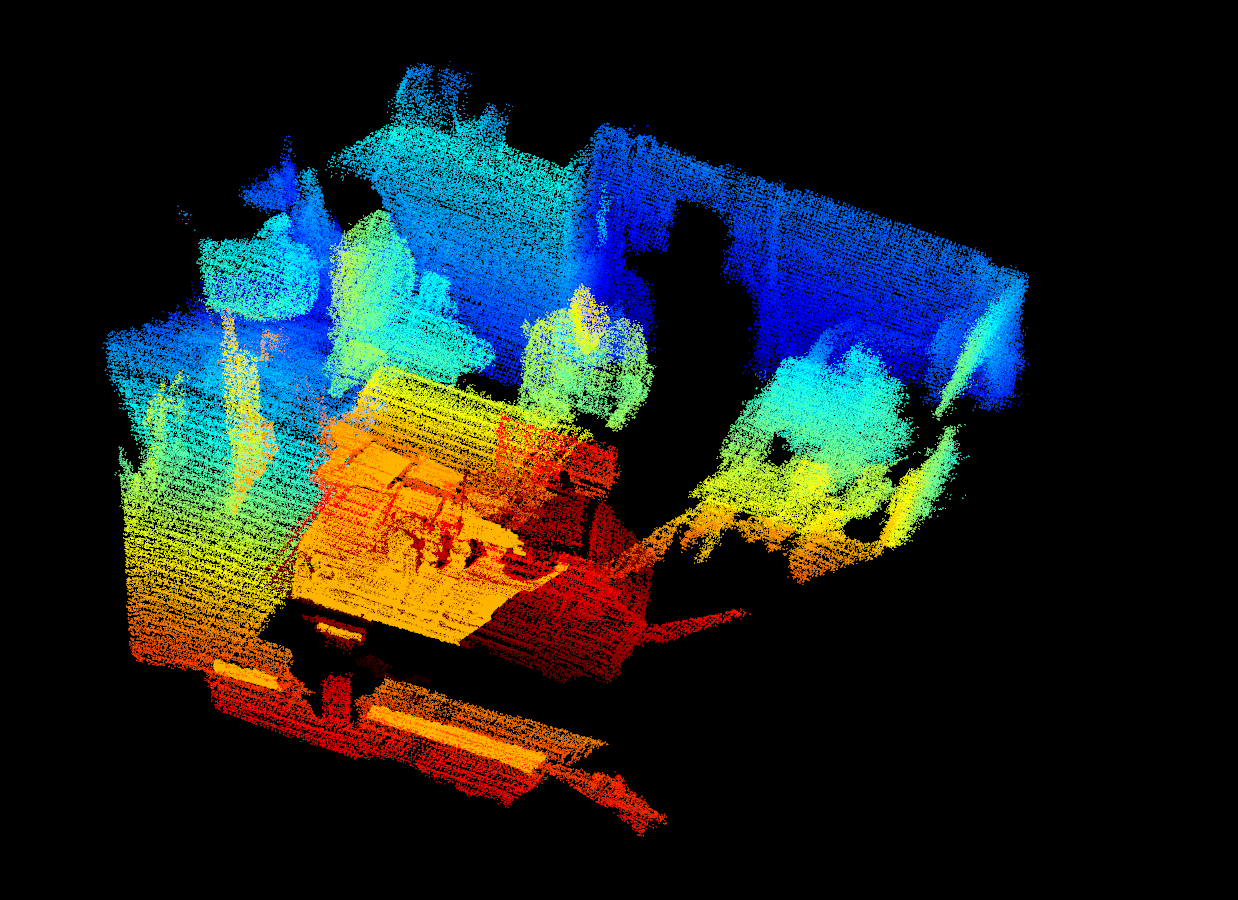

LiDAR SLAM is one of the most accurate and reliable mapping techniques for drones. It uses light detection and ranging sensors to scan the environment by sending out laser pulses and measuring how long they take to return.

How it works:

- The drone’s LiDAR sensor fires thousands (or millions) of laser pulses per second.

- These pulses bounce off objects and return to the sensor, measuring distance, depth, and shape.

- SLAM algorithms process this data to create a high-resolution 3D point cloud map in real time.

- The drone continuously updates its position while flying, ensuring precise navigation and mapping.
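The distance measurement behind those steps is simple arithmetic: the pulse travels to the surface and back, so the range is half the round-trip time multiplied by the speed of light. The sketch below shows that calculation and how one return becomes a 3D point in the sensor's own frame. The function names and the angle convention (azimuth in the horizontal plane, elevation above it) are assumptions for illustration.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def time_of_flight_to_range(round_trip_seconds):
    """Convert a pulse's round-trip time into a one-way distance in meters."""
    return SPEED_OF_LIGHT_M_S * round_trip_seconds / 2.0


def return_to_point(range_m, azimuth_rad, elevation_rad):
    """Convert one LiDAR return into an (x, y, z) point in the sensor frame."""
    horizontal = range_m * math.cos(elevation_rad)
    x = horizontal * math.cos(azimuth_rad)
    y = horizontal * math.sin(azimuth_rad)
    z = range_m * math.sin(elevation_rad)
    return (x, y, z)


# A pulse that returns after roughly 667 nanoseconds hit something about 100 m away.
distance = time_of_flight_to_range(667e-9)
print(distance, return_to_point(distance, math.radians(30), math.radians(-10)))
```

Repeating this for every pulse, and placing each point using the drone's estimated pose at that instant, is what produces the point cloud.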

Advantages of LiDAR SLAM:

- Works in low-light and poor visibility conditions (nighttime, fog, smoke).

- Highly accurate and precise, even in complex environments.

- Can map large-scale environments quickly and in great detail.

Limitations:

- LiDAR sensors are costly compared to cameras.

- Requires more processing power than other SLAM methods.

2. Visual SLAM (V-SLAM)

Visual SLAM, also known as V-SLAM, relies on cameras instead of laser scanners to track movement and map the environment. It’s widely used in indoor navigation, AR/VR applications, and small drones.

How it works:

- The drone’s camera captures images of its surroundings in real-time.

- SLAM algorithms identify key features in the environment (edges, textures, objects).

- The drone tracks these features as it moves, estimating its position and orientation.

- A 2D or 3D map is generated using computer vision techniques.
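The "tracking features" step usually comes down to matching feature descriptors between consecutive frames. The sketch below assumes each feature has already been reduced to a short binary descriptor (stored here as an integer) and matches frames by Hamming distance, which is a common approach for binary descriptors. The 8-bit descriptors, threshold, and function names are illustrative assumptions, and real descriptors are much longer.

```python
def hamming_distance(a, b):
    """Count the bits that differ between two binary descriptors."""
    return bin(a ^ b).count("1")


def match_features(previous, current, max_distance=2):
    """Match each descriptor in the previous frame to its closest one in
    the current frame, keeping only matches within max_distance bits.

    Returns a list of (previous_index, current_index) pairs.
    """
    if not current:
        return []
    matches = []
    for i, descriptor in enumerate(previous):
        j, distance = min(
            ((j, hamming_distance(descriptor, candidate))
             for j, candidate in enumerate(current)),
            key=lambda pair: pair[1],
        )
        if distance <= max_distance:
            matches.append((i, j))
    return matches


# Toy 8-bit descriptors from two consecutive frames.
frame_a = [0b11110000, 0b00001111, 0b10101010]
frame_b = [0b00001110, 0b11110001, 0b01010101]
print(match_features(frame_a, frame_b))  # [(0, 1), (1, 0)]
```

The third feature finds no close match, which is what happens when something leaves the camera's view. The matched pairs are what a V-SLAM system would use to estimate how the camera moved between frames.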

Advantages of V-SLAM:

- More affordable than LiDAR-based systems.

- Works well indoors and in controlled environments.

- Lightweight sensors, making it ideal for small drones and consumer applications.

Limitations:

- Can be affected by motion blur and rapid drone movement.

- Struggles in low-light conditions (relies on camera visibility).

- Less accurate than LiDAR, especially in large outdoor environments.

3. RGB-D SLAM

RGB-D SLAM combines Visual SLAM with depth sensors, creating a more detailed and accurate 3D reconstruction than standard V-SLAM.

How it works:

- The drone uses an RGB-D camera (which captures both color images and depth information).

- SLAM algorithms combine visual and depth data, creating a high-resolution 3D model.

- The drone uses depth perception to understand distances between objects, improving navigation and mapping accuracy.
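Turning a depth pixel into a 3D point is typically done with the pinhole camera model. The sketch below assumes known camera intrinsics (focal lengths `fx`, `fy` and principal point `cx`, `cy`, all in pixels) and a depth value in meters. The specific numbers are made up for illustration.

```python
def backproject_pixel(u, v, depth_m, fx, fy, cx, cy):
    """Convert pixel (u, v) with a measured depth into a 3D point.

    Uses the pinhole camera model. The result is in the camera frame:
    x to the right, y down, z forward along the optical axis.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


# Hypothetical intrinsics for a 640x480 depth camera.
FX, FY, CX, CY = 500.0, 500.0, 320.0, 240.0

# A pixel 100 columns right of the image center, measured at 2 m depth.
print(backproject_pixel(420, 240, 2.0, FX, FY, CX, CY))
```

Applying this to every pixel of a depth image yields a colored point cloud for that frame, which the SLAM system then aligns with earlier frames.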

Advantages of RGB-D SLAM:

- More accurate than standard Visual SLAM due to added depth perception.

- Lower cost than LiDAR, making it a budget-friendly alternative.

- Works well for indoor and close-range mapping applications.

Limitations:

- Less accurate than LiDAR SLAM for large-scale outdoor mapping.

- Requires suitable lighting conditions: the color camera needs adequate light, and many depth sensors lose accuracy in strong sunlight.

Each SLAM type has its own strengths and trade-offs, depending on the application.

So, which SLAM technology is best for drone mapping?

- If high accuracy and long-range mapping are needed, LiDAR SLAM is the best choice.

- If the goal is indoor mapping or lightweight applications, Visual SLAM is more practical.

- If you’re looking to balance between cost and 3D detail, RGB-D SLAM is ideal.

Next up, let’s explore how the emerging trends in SLAM technology are shaping the future of drone mapping.

Future Trends in SLAM Technology

SLAM has already transformed drone mapping and 3D reconstruction, but this is just the beginning. As AI, deep learning, and real-time processing advance, SLAM is evolving to be faster, smarter, and more autonomous than ever before.

From self-navigating drones to large-scale swarm mapping, the future of SLAM is full of exciting possibilities.

Let’s dive into key future trends shaping the next generation of drone mapping.

1. AI & Deep Learning in SLAM for Improved Automation

Traditional SLAM systems rely on feature detection and sensor fusion, but with deep learning, drones can:

- Recognize and classify objects in real time (e.g., roads, buildings, vehicles).

- Predict and avoid obstacles dynamically, improving autonomous navigation.

- Self-correct errors in mapping, making SLAM even more precise.

Neural networks can help SLAM algorithms learn from previous mapping patterns and improve future mapping accuracy.

2. Swarm SLAM

Imagine hundreds of drones working together to map an entire city in real time. That’s the goal of Swarm SLAM, where multiple drones share data, build maps collaboratively, and improve efficiency.

How Swarm SLAM works:

- Drones communicate with each other, exchanging real-time mapping data.

- Instead of each drone mapping its own area separately, they merge their data to build a single, large-scale 3D map.

- AI algorithms ensure drones don’t duplicate work, optimizing efficiency.
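The map-merging idea can be sketched with a toy example. Here each drone reports the occupied cells it has found in its own local grid, along with an offset that places that grid in a shared frame. Treating the offsets as known and translation-only is a strong simplifying assumption (in practice the alignment between drones has to be estimated), and the function names are hypothetical.

```python
def to_shared_frame(cells, offset):
    """Shift a drone's local (x, y) grid cells into the shared map frame."""
    offset_x, offset_y = offset
    return {(x + offset_x, y + offset_y) for x, y in cells}


def merge_maps(local_maps):
    """Merge several drones' occupied cells into one shared map.

    local_maps is a list of (cells, offset) pairs, one per drone.
    Returns the merged set of cells and the cells seen by more than one drone.
    """
    merged = set()
    overlap = set()
    for cells, offset in local_maps:
        shared = to_shared_frame(cells, offset)
        overlap |= merged & shared
        merged |= shared
    return merged, overlap


drone_a = ({(0, 0), (1, 0)}, (0, 0))  # starts at the shared origin
drone_b = ({(0, 0), (1, 0)}, (1, 0))  # starts one cell to the right
merged, overlap = merge_maps([drone_a, drone_b])
print(sorted(merged), sorted(overlap))
```

The overlap set is a simple proxy for duplicated work: a coordinator could use it to steer drones toward areas that no one has covered yet.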

3. The MASt3R-SLAM Breakthrough

MASt3R-SLAM is a new AI-powered SLAM system that’s redefining real-time 3D reconstruction.

Unlike traditional SLAM, which requires calibrated cameras and fixed setups, MASt3R-SLAM works with any camera type, even uncalibrated drone footage.

Here’s what makes MASt3R-SLAM unique:

- It can handle different focal lengths, sensor sizes, and even zooming video footage.

- It can produce denser, more accurate 3D reconstructions.

- It runs at 15 FPS on a single NVIDIA GeForce RTX 4090 GPU.

This means:

- Drones can now create real-time maps from any camera footage (including live video).

- It is a good option for fast, plug-and-play drone mapping in unknown environments.

- No need for pre-calibrated cameras, simplifying setup and deployment.

Conclusion

From real-time 3D reconstruction to fully autonomous navigation, drones are now capable of mapping the world in ways once impossible.

As AI and deep learning advance, next-gen SLAM systems will push drone technology even further. Soon, drones will navigate without GPS, collaborate to map entire cities in real time, and seamlessly integrate into industries we never imagined.

SLAM is reshaping the future of drone mapping—the only question is, how fast will you adapt?

If you’re ready to stay ahead of this revolution, now’s the time to upgrade your drone flying skills.

Check out DroneU’s expert-led shooting & mapping course and start mastering the future trends of drone technology today!

FAQs

1. What is SLAM drone technology?

SLAM drone technology lets a drone map and navigate in real time without GPS. It uses cameras, LiDAR, and motion sensors to track the drone’s position while building a 3D model of its surroundings, making it well suited to GPS-denied environments like tunnels, forests, and dark areas.

2. How is SLAM different from photogrammetry?

Photogrammetry stitches multiple images together and requires post-processing, which makes it slower. SLAM creates maps in real time using sensors and cameras. SLAM is great for faster real-time applications, while photogrammetry is best for detailed, large-scale mapping.

3. What industries benefit the most from SLAM-based drones?

Industries like construction, surveying, search and rescue, agriculture, defense, and automotive will benefit the most from SLAM-based drones.